In my last post, I outlined what I think are the twelve key constraints you need to think about if you are going to build high-quality software that people want to use. As I mentioned, thinking these through needn’t be a mind-sapping endeavour, and for some of them you may just decide they’re not important right now. Here’s the rub, though: once you’ve decided something is important enough to define as a constraint on your system, you’d better think about how you’re going to test it. If not, you may as well kiss the requirement goodbye, as eventually people will stop paying attention to it. Here’s how I would go about testing those constraints.

The trouble with typical non-functional requirements

“It’s not fast enough…” That was the response I always got from my client / UX lead whenever I asked them, during development, what they thought of the application. The trouble was, the client was used to seeing a vision demo that had canned data and used smoke and mirrors to mimic the application, without having to worry about anything like network latency or the size of the data. During development, the software you are building will go through various degrees of speed and performance as you strike a balance between functionality, flexibility and speed. If you don’t want to get the shaft as a much-harried developer, you need to arrive at an understanding with the client about what performance is acceptable and under what conditions.

Make your requirements SMART

First off, deciding and agreeing how the application needs to behave has to be a conversation between UX, the business and the technical team; you all fail if the requirements are crappy, and you all need to sign up to them. What you need to come away with is a clearly defined set of goals for the ‘quality’ of the application. Like any other requirement, they should be clear, simple and understandable. A great way to check this is the SMART principle, first written up by Mike Mannion and Barry Keepence in a 1995 paper. Basically, they suggest that requirements should be:

Specific – Clear, consistent and simple

Measurable – If you can’t measure it, you won’t know when you’ve achieved it

Attainable – The requirement must be something the team thinks is achievable given what they know

Realizable – Can it actually be done within the known constraints on how the project is being executed (is there enough money / time to achieve this goal, for example)?

Traceable – It is important to understand why a requirement exists so that it can be justified (and to question if it is still needed if the original driver or assumption changes)

A common structure for defining the requirements

I did quite a bit of hunting for a way to describe requirements as diverse as conformance to coding standards and the ability to cope with year-on-year growth. Fortunately, I found this post by Ryan Shriver that talks about how he uses a method by Tom Gilb:

[requirements] can 1) be specified numerically and 2) systems can be engineered to meet specific levels of performance. Qualities can be specified using a minimum of six attributes:

Name: A unique name for the quality

Scale: “What” you’ll measure (aka the units of measure, such as seconds)

Meter: “How” you’ll measure (aka the device you’ll use to obtain measurements)

Target: The level of performance you’re aiming to achieve (how good it can be)

Constraint: The level of performance you’re trying to avoid (how bad it can be)

Benchmark: Your current level of performance.
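As a minimal sketch, the six attributes map naturally onto a small record type. The Python below is illustrative only: the `QualityRequirement` class, the `lower_is_better` flag and the three status labels are hypothetical, not part of Gilb’s method or Shriver’s post. It also assumes that a measurement landing exactly on the target or constraint counts as reaching it. The usage lines reuse the figures from the processing-time example in this post.

```python
from dataclasses import dataclass


@dataclass
class QualityRequirement:
    """A quality requirement described by the six attributes (hypothetical class)."""
    name: str
    scale: str             # "what" is measured, e.g. elapsed milliseconds
    meter: str             # "how" it is measured, e.g. log messages
    target: float          # the level we are aiming for
    constraint: float      # the level we must not go beyond
    benchmark: float       # the current level
    lower_is_better: bool = True  # assumption: False for things like months of runway

    def _at_least_as_good(self, value: float, threshold: float) -> bool:
        # Compare in whichever direction counts as "good" for this requirement.
        # Assumption: a value exactly on the threshold counts as reaching it.
        if self.lower_is_better:
            return value <= threshold
        return value >= threshold

    def assess(self, measurement: float) -> str:
        """Classify a measurement against the target and the constraint."""
        if self._at_least_as_good(measurement, self.target):
            return "meets target"
        if self._at_least_as_good(measurement, self.constraint):
            return "acceptable"
        return "violates constraint"


if __name__ == "__main__":
    parsing = QualityRequirement(
        name="Parsing of a large search result",
        scale="Elapsed ms to parse an XML response with 1000 results",
        meter="Parse-time log messages",
        target=1200,
        constraint=5000,
        benchmark=4500,
    )
    print(parsing.assess(parsing.benchmark))  # acceptable
```

The `lower_is_better` flag lets the same structure cover a time in milliseconds (smaller is better) and a runway in months (bigger is better).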

Some sample requirements and reports

I think it’s best to look at a few examples drawn from my last post on essential non-functional requirements for building quality software. What I’m showing here is how I would specify, test and report on each constraint:

Processing times

First off, a relatively simple one. Measuring processing time is a matter of having the system log how long each operation took.

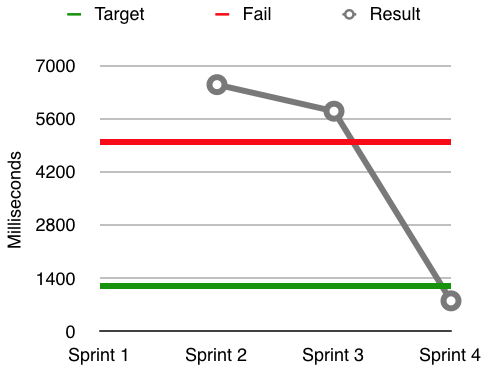

Name: Parsing of a large search result

Scale: Elapsed milliseconds to parse an XML response containing 1000 results, measured from when the client receives it

Meter: Log messages with a defined signature that output the parsing time in milliseconds

Target: ≤1200 ms (i.e. 1200 ms or less is the target time)

Constraint: >5000 ms (i.e. more than 5000 ms is an epic fail)

Benchmark: Build 155; test environment; 3/21/11; 4500 ms
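The Meter here relies on log messages with a defined signature. Below is a minimal Python sketch of that idea. The original client was Flex, and the `PARSE_TIME results=<n> ms=<n>` signature, the logger name and the helper functions are all hypothetical. The sketch times a block of code, logs the result in a fixed, greppable format, then pulls the measurements back out of the log so they can be compared with the target and constraint.

```python
import logging
import re
import time
from contextlib import contextmanager

# Hypothetical log signature; pick one format and never vary it.
SIGNATURE = "PARSE_TIME"
PATTERN = re.compile(SIGNATURE + r" results=(\d+) ms=(\d+)")

logger = logging.getLogger("search.parsing")


@contextmanager
def timed_parse(result_count: int):
    """Time the wrapped block and log the elapsed milliseconds with SIGNATURE."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed_ms = round((time.perf_counter() - start) * 1000)
        logger.info("%s results=%d ms=%d", SIGNATURE, result_count, elapsed_ms)


def parse_times(log_lines, result_count=1000):
    """Extract elapsed times (ms) for parses of exactly `result_count` results."""
    times = []
    for line in log_lines:
        match = PATTERN.search(line)
        if match and int(match.group(1)) == result_count:
            times.append(int(match.group(2)))
    return times


def worst_case_status(times, target_ms=1200, constraint_ms=5000):
    """Compare the slowest observed parse with the target and the constraint."""
    worst = max(times)  # raises ValueError if no measurements were found
    if worst <= target_ms:
        return worst, "meets target"
    if worst <= constraint_ms:
        return worst, "acceptable"
    return worst, "violates constraint"


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    with timed_parse(result_count=1000):
        pass  # parse the 1000-result XML response here
```

Judging on the worst observed time is an assumption; a median or percentile may suit the agreement you reach with the client better.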

Reporting this over time could give a chart something like this:

Architectural standards

This one seems a bit trickier at first glance. How can you measure how well a codebase complies with architectural standards? Fortunately, there are some great static analysis tools out there that let you inspect your code for potential problems. We have used PMD and FlexPMD on our projects; you can write your own rules or draw from common rulesets. Using these tools, we can turn something like architectural conformance into something quantifiable, and therefore testable:

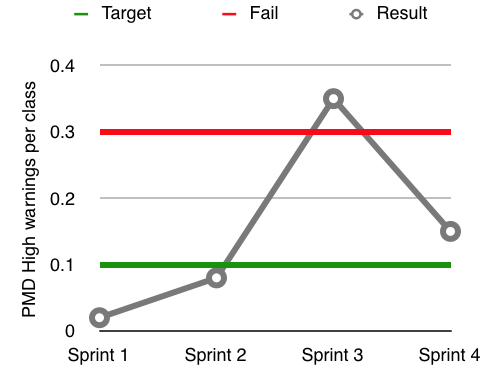

Name: Conformance to standard Flex architectural best practices

Scale: Number of PMD high warnings per class from the ‘cairngorm’ and ‘architecture’ PMD rulesets

Meter: FlexPMD warnings reported by the nightly Hudson/Jenkins build, divided by the number of classes


You can see here how architectural standards started to lapse in sprint 3. The good news was that it was caught and addressed. This sort of conversation is far easier to have with your team when you have data to back it up.
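Because the Meter divides the warning count by the number of classes, the figure stays comparable as the codebase grows. A minimal Python sketch of that normalisation, plus a check for the kind of build-on-build lapse described above, might look like this. `BuildReport`, `find_lapses` and the `tolerance` parameter are hypothetical, and the counts would come from whatever your nightly build exports, not from any specific FlexPMD or Jenkins API.

```python
from dataclasses import dataclass


@dataclass
class BuildReport:
    """Counts from one nightly build (hypothetical structure)."""
    label: str          # e.g. the sprint or build number
    high_warnings: int  # high warnings from the chosen rulesets
    class_count: int    # number of classes analysed


def warnings_per_class(report: BuildReport) -> float:
    """Normalise by codebase size so a growing project isn't penalised."""
    if report.class_count == 0:
        return 0.0
    return report.high_warnings / report.class_count


def find_lapses(reports, tolerance=0.0):
    """Return the labels of builds whose warnings per class rose versus the previous build."""
    lapses = []
    for previous, current in zip(reports, reports[1:]):
        if warnings_per_class(current) > warnings_per_class(previous) + tolerance:
            lapses.append(current.label)
    return lapses


if __name__ == "__main__":
    # Made-up numbers purely to show the calculation.
    history = [
        BuildReport("build 101", 40, 200),
        BuildReport("build 102", 44, 240),
        BuildReport("build 103", 90, 260),
        BuildReport("build 104", 50, 280),
    ]
    for report in history:
        print(f"{report.label}: {warnings_per_class(report):.2f} warnings/class")
    print(find_lapses(history))  # ['build 103']
```

A non-zero `tolerance` keeps small build-to-build noise from being flagged as a lapse.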

Year on year growth requirements

One last example, to demonstrate some breadth. If you want to test growth requirements, you basically have to think about which of your other requirements is likely to fail first as your user base grows. It could be storage needs or response times, but more likely it’s peak throughput. In the example below, we increase the standard load on the system until the throughput test fails, and use that to predict how much runway the current system has before it breaks down.

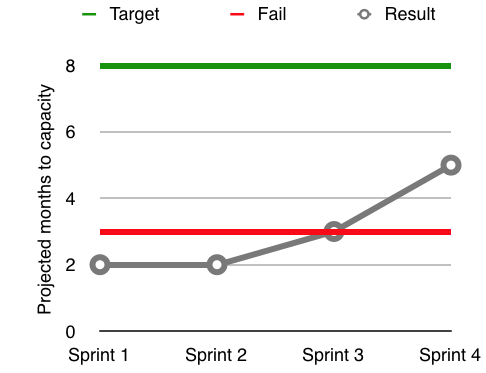

Name: 60% year-on-year growth

Scale: Number of months until the system hits peak-throughput failure

Meter: Standard load scenario increased by 5% per month, measured at each step until failure

Target: 8 months

Constraint: 3 months

Benchmark: Current application; 3/21/11; Production environment; 2 months
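The Meter above boils down to a simple loop. Here is a minimal Python sketch, assuming you can wrap your throughput test in a function that takes a load level and reports pass or fail. `months_of_runway` and `passes_load_test` are hypothetical names, and the capacity check in the example is a stand-in for a real load test. The sketch reads “5% per month” as 5% of the baseline load added each month (12 × 5% = 60% a year); compounding 5% monthly would instead give roughly 80% a year.

```python
from typing import Callable, Optional


def months_of_runway(
    passes_load_test: Callable[[float], bool],
    base_load: float,
    monthly_increase: float = 0.05,
    max_months: int = 36,
) -> Optional[int]:
    """Raise the standard load month by month until the throughput test fails.

    Returns the first month at which the test fails (0 = already failing at
    today's load), or None if it still passes at `max_months`.
    """
    for month in range(max_months + 1):
        # Simple growth: add 5% of the baseline load each month (60% a year).
        load = base_load * (1 + monthly_increase * month)
        if not passes_load_test(load):
            return month
    return None


if __name__ == "__main__":
    # Stand-in for a real load test: pretend the system copes with up to 108 units.
    def fake_load_test(load: float) -> bool:
        return load <= 108

    runway = months_of_runway(fake_load_test, base_load=100)
    print(runway)  # 2
```

The returned figure is what you would then compare with the target and constraint months.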

Simplicity and Consistency

This pretty much wraps it up for non-functional requirements (and FWIW, I agree with Mike Cohn that we should be calling these things constraints). Non-functional requirements can be a gnarly area, as they attempt to describe the parts of the software most prone to gradual decay and entropy, but I think these are exactly the areas that are important to test if you are serious about building decent quality software. What I like about this approach is that the output reports are nice, clean and simple. They allow you to report on really varied requirements in a consistent manner and to have a data-driven conversation if things start to slide.